The Vocera Analytics installation application lets you run the Data Migration for Engage Analytics process.

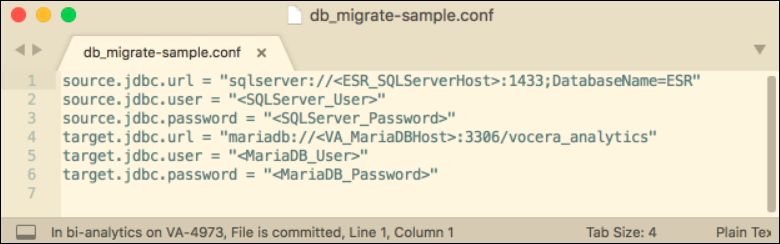

Configuring db_migrate.conf

To configure the Engage Analytics migration source and target for db_migrate, perform the following tasks:

- Navigate to the DataMigration directory in the Vocera Analytics installation directory (VoceraAnalytics>DataMigration).

- Create a copy of the file db_migrate-sample.conf and rename the copy as db_migrate.conf.

- Open the configuration file db_migrate.conf using an editor.

- Provide the URL, username, and password for the source and destination, replacing the placeholders in the file that are enclosed in angle brackets.

Note: Do not include angle brackets around the values, but ensure the values are enclosed in double quotes. The following screenshot displays the sample db_migrate.conf file.


- Save the modified db_migrate.conf file.

Note: All string values (on the right side of the equals sign) must be enclosed in double quotes. If a backslash or a double quote occurs in a string value, it must be escaped with a backslash.

For example, the string "\\\"" in a configuration file produces a single backslash followed by a double quote. Failure to follow these instructions results in an error and a failed migration if the string contains a special character (as password policies often require), a backslash, or a double quote.
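The escaping rule in the note above can be sketched in Python. This is a minimal illustration and not part of the Vocera tooling: the helper name `quote_conf_string` is hypothetical, and the sketch assumes that the backslash and the double quote are the only characters that need escaping, as the note states.

```python
def quote_conf_string(value: str) -> str:
    """Return value as a double-quoted string for a .conf file.

    Hypothetical helper: escapes backslashes and double quotes with a
    backslash, following the rule described in the note.
    """
    # Escape backslashes first so that the backslashes added in front of
    # double quotes are not escaped a second time.
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


# A value made of one backslash followed by one double quote.
print(quote_conf_string('\\"'))  # prints "\\\""
```

The printed result matches the example in the note: the raw value, a backslash followed by a double quote, is written in the file as "\\\"".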

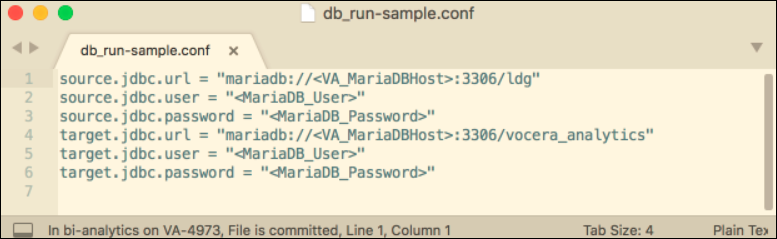

Configuring db_run.conf

To configure the Engage Analytics migration source and target for db_run.conf, perform the following tasks:

- Navigate to the DataMigration directory in the Vocera Analytics installation directory (VoceraAnalytics>DataMigration).

- Create a copy of the file db_run-sample.conf and rename the copy as db_run.conf.

- Open the configuration file db_run.conf using an editor.

- Provide the URL, username, and password for the source and destination, replacing the placeholders in the file that are enclosed in angle brackets.

Note: Do not include angle brackets around the values, but ensure the values are enclosed in double quotes. The following screenshot displays the sample db_run.conf file.


Note: All string values (on the right side of the equals sign) must be enclosed in double quotes. If a backslash or a double quote occurs in a string value, it must be escaped with a backslash. For example, the string "\\\"" in a configuration file produces a single backslash followed by a double quote. Failure to follow these instructions results in an error and a failed migration if the string contains a special character (as password policies often require), a backslash, or a double quote.

- Save the modified db_run.conf file.

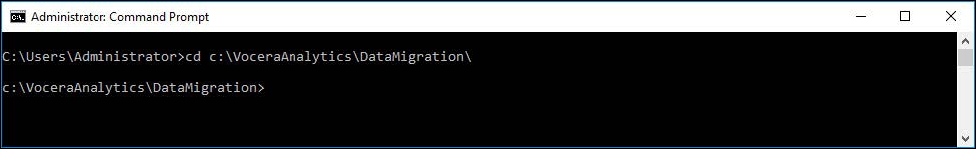
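Before running the migration, it can help to confirm that no angle-bracket placeholder was left in db_migrate.conf or db_run.conf. The following Python sketch is one possible check, not a Vocera-provided tool. The function name `find_placeholders` and the key names in the sample text are hypothetical, and the pattern assumes that a placeholder is any run of characters between `<` and `>` on one line.

```python
import re

# Assumption: a placeholder looks like <some text>, with no quotes,
# nested angle brackets, or line breaks inside it.
PLACEHOLDER = re.compile(r'<[^<>"\n]+>')


def find_placeholders(conf_text):
    """Return (line number, placeholder) pairs still present in conf_text."""
    found = []
    for lineno, line in enumerate(conf_text.splitlines(), start=1):
        for match in PLACEHOLDER.finditer(line):
            found.append((lineno, match.group(0)))
    return found


# Hypothetical file content; the real key names may differ.
sample = 'url = "<source-url>"\nusername = "admin"\npassword = "<password>"\n'
for lineno, placeholder in find_placeholders(sample):
    print(f"line {lineno}: {placeholder} has not been replaced")
```

A real password that happens to contain text between angle brackets would be reported as well, so treat the output as a prompt to review the line rather than as a definite error.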

Executing the migrate.cmd Windows Batch File

To execute the Data Migration process, perform the following tasks:

- Insert a row with the VMI client name in the dimvmiclients table to avoid duplication of data.

For example, if Engage-VS is the client, insert Engage-VS into the dimvmiclients table.

Note: Duplication of data occurs in a setup where Engage alarms or alerts are sent through VMI to the Voice server.

- Open a command prompt window (cmd.exe) and change the current directory to the DataMigration directory in the Vocera Analytics installation directory.

The following screenshot shows the DataMigration directory in the command prompt:

- Type migrate and press Enter.

The data migrates from the source database to the target database.

- Run PostMigrationCleanup.bat, located at <InstallDir>/VoceraAnalytics/AnalyticsServer/, to clear plain-text passwords from the configuration file.

This step ensures that the credentials of the VMP database and the Vocera Analytics database are protected.
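The last two steps above can also be scripted. The following Python sketch is only an illustration under several assumptions: that migrate.cmd sits in the VoceraAnalytics\DataMigration directory under the installation directory, that both scripts take no arguments, and that migrate.cmd returns a non-zero exit code when it fails. The function name `run_migration` is hypothetical, and the sketch does not cover the manual insert into the dimvmiclients table.

```python
import subprocess
from pathlib import PureWindowsPath


def run_migration(install_dir, run=subprocess.run):
    """Run migrate.cmd, then PostMigrationCleanup.bat (hypothetical helper).

    install_dir is the directory that contains VoceraAnalytics.
    The run parameter exists so the command runner can be swapped out,
    for example for a dry run.
    """
    base = PureWindowsPath(install_dir) / "VoceraAnalytics"
    steps = [
        (base / "DataMigration", "migrate.cmd"),
        (base / "AnalyticsServer", "PostMigrationCleanup.bat"),
    ]
    for working_dir, script in steps:
        # check=True raises CalledProcessError on a non-zero exit code,
        # so the cleanup step is skipped if the migration step fails.
        run(["cmd.exe", "/c", script], cwd=str(working_dir), check=True)
```

Calling `run_migration` with the installation directory on the Windows host runs both steps in order. Skipping the cleanup after a failed migration is a design choice of this sketch, made so the configuration can be corrected and the migration retried. If the migration is abandoned instead, run PostMigrationCleanup.bat manually so that plain-text passwords do not remain in the configuration file.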